Introduction to Neural Networks: The Backbone of Deep Learning

Explore the fundamentals of neural networks, the core technology driving deep learning, artificial intelligence, and modern machine learning applications.

Table of Contents

- Introduction

- What Exactly *Is* a Neural Network?

- How Neural Networks Learn: The Training Process

- Key Components: Neurons, Layers, and Activation Functions

- Types of Neural Networks: A Quick Overview

- Real-World Applications: Where Do We See Them?

- The Relationship with Deep Learning

- Challenges and Future Directions

- Conclusion

- FAQs

Introduction

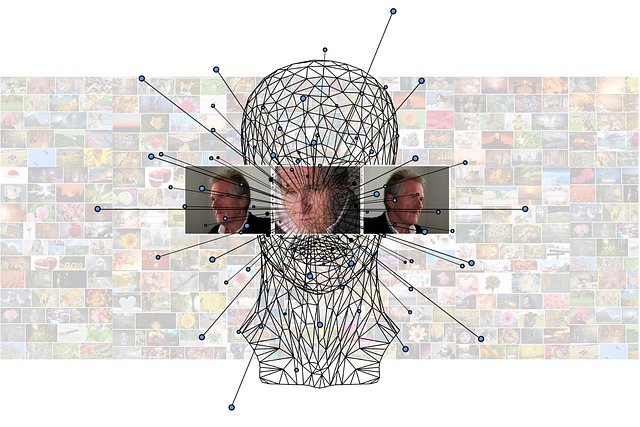

Ever wondered how your phone unlocks just by looking at your face? Or how Netflix seems to know exactly what movie you’ll want to watch next? The magic behind many of these sophisticated technologies lies in something called artificial neural networks. This article offers an introduction to neural networks and aims to demystify these powerful tools, which are often described as the backbone of deep learning. Forget impenetrable jargon; we're going to explore this fascinating world in a way that makes sense, using everyday examples and focusing on the core concepts.

Neural networks are computational models inspired by the intricate structure and function of the human brain. They don't replicate the brain exactly (we're still a long way from that!), but they borrow the fundamental idea of interconnected processing units – neurons – working together to learn patterns, make decisions, and solve complex problems. From filtering spam emails to enabling self-driving cars, neural networks are quietly revolutionizing industries and reshaping our interaction with technology. Understanding them isn't just for computer scientists anymore; it’s becoming essential knowledge in our increasingly AI-driven world. So, let's peel back the layers and see what makes these networks tick.

What Exactly *Is* a Neural Network?

Okay, let's break it down. At its heart, an artificial neural network (ANN) is a system of hardware and/or software patterned after the operation of neurons in the human brain. Think of it less like a literal brain simulation and more like a mathematical framework inspired by biological principles. Instead of biological neurons firing electrical signals, ANNs have interconnected nodes or 'neurons' organized in layers. These artificial neurons receive input signals, process them, and then pass output signals to other neurons connected to them. It’s a bit like a vast, digital game of telephone, but much, much smarter.

Imagine you're trying to identify whether a picture contains a cat or a dog. A simple program might struggle with the variations – different breeds, angles, lighting. A neural network approaches this differently. The first layer of neurons might receive the raw pixel data from the image. Each neuron processes a small piece of this data and passes its result to the next layer. This next layer might start identifying basic features like edges or corners. Subsequent layers combine these features to recognize more complex elements like ears, tails, or fur patterns. Finally, the output layer weighs all this information to make a prediction: "cat" or "dog." The strength of the connections (called 'weights') between these neurons determines how much influence one neuron's output has on another, and it's these weights that get adjusted during the learning process.

So, rather than being explicitly programmed with rules for "cat" vs. "dog," the network learns these rules by analyzing vast amounts of labelled data (pictures already identified as cats or dogs). This reflects a view long championed by pioneers of the field such as Geoffrey Hinton: the power lies not in predefined rules but in the network's ability to adapt and discover patterns from data. It’s this learning capability that makes neural networks so versatile and powerful for tasks where the rules are too complex or subtle for humans to define explicitly.

How Neural Networks Learn: The Training Process

How does a network actually "learn"? It's not magic, but a rather clever mathematical process involving trial and error, refined over potentially millions of examples. The core idea revolves around minimizing the difference between the network's predictions and the actual correct answers. This process typically involves a few key steps, repeated iteratively: forward propagation, calculating the error (loss), backpropagation, and updating the connections (weights).

Think of learning to shoot a basketball. First, you take a shot (forward propagation) based on your current understanding of distance, angle, and force. You see how far off the mark you were – maybe the ball went too far left and too high (calculating the loss). Now, you mentally adjust. You think, "Okay, I need to aim slightly right and use a bit less force." This adjustment process, figuring out how your initial actions contributed to the error and how to correct them, is akin to backpropagation. Finally, you internalize this correction for your next shot (updating the weights/connections). Neural networks do something similar, but they use calculus-based optimization (typically gradient descent) to make these adjustments systematically across thousands or millions of connections.

The network is fed vast amounts of training data – inputs paired with their correct outputs (e.g., images of cats labelled "cat"). It makes a prediction for each input, compares it to the correct label using a 'loss function' (which quantifies the error), and then uses backpropagation to trace the error backward through the network, determining how much each connection weight contributed to that error. These weights are then adjusted slightly in a direction that should reduce the error next time. Repeat this millions of times, and voilà! The network gradually becomes better and better at its assigned task.

- Forward Propagation: Input data flows through the network, layer by layer, generating an initial prediction or output based on the current weights.

- Loss Function: A mathematical function that measures the discrepancy (the error or 'loss') between the network's prediction and the actual target value.

- Backpropagation: An algorithm that calculates the gradient of the loss function with respect to each weight in the network. Essentially, it figures out how much each connection contributed to the overall error.

- Weight Adjustment (Optimization): An optimization algorithm such as gradient descent updates the weights in the direction opposite to the gradient, aiming to reduce the loss on subsequent iterations.
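To make the four steps concrete, here is a minimal sketch in plain Python. It trains a single neuron with one weight and one bias, which is far simpler than a real network. The toy data (generated from y = 2x + 1), the learning rate, and the names `forward` and `mean_squared_error` are all assumptions chosen for illustration.

```python
# Toy data: the target rule y = 2x + 1 is an assumption for this sketch.
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]

w, b = 0.0, 0.0          # the "connections" the neuron will learn
learning_rate = 0.05     # how large each adjustment step is


def forward(x, w, b):
    """Forward propagation: compute a prediction from the current weights."""
    return w * x + b


def mean_squared_error(w, b):
    """Loss function: average squared gap between prediction and target."""
    return sum((forward(x, w, b) - y) ** 2 for x, y in data) / len(data)


initial_loss = mean_squared_error(w, b)

for epoch in range(500):
    # Backpropagation (here just the chain rule applied to one neuron):
    # dL/dw = mean(2 * error * x), dL/db = mean(2 * error)
    grad_w = sum(2 * (forward(x, w, b) - y) * x for x, y in data) / len(data)
    grad_b = sum(2 * (forward(x, w, b) - y) for x, y in data) / len(data)

    # Weight adjustment: step against the gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mean_squared_error(w, b)
print(f"w={w:.3f}, b={b:.3f}, loss {initial_loss:.3f} -> {final_loss:.6f}")
```

After enough passes, `w` and `b` settle close to 2 and 1, the values used to generate the data. Real networks repeat the same loop, only with many more weights and with the gradients computed layer by layer.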

Key Components: Neurons, Layers, and Activation Functions

To truly grasp neural networks, we need to understand their fundamental building blocks. At the most basic level, we have the artificial neuron (or node). Each neuron receives one or more input signals, which are essentially numerical values. These inputs are multiplied by corresponding 'weights' – numbers representing the strength or importance of each connection. The weighted inputs are then summed up, often along with a 'bias' term (another learnable parameter that helps shift the output). This sum is then passed through an activation function.
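As a rough illustration, the weighted sum inside one neuron can be written in a few lines of plain Python. The function name `neuron_pre_activation` and the numbers below are made up for this sketch; in a trained network the weights and bias would be learned rather than typed in.

```python
def neuron_pre_activation(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, before any activation function."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


inputs = [1.0, 2.0, 3.0]     # incoming signals
weights = [0.5, -1.0, 2.0]   # one weight per input (hypothetical values)
bias = 0.5

z = neuron_pre_activation(inputs, weights, bias)
print(z)  # (1*0.5) + (2*-1.0) + (3*2.0) + 0.5 = 5.0
```

Notice how the negative weight on the second input pulls the sum down while the large weight on the third input dominates it. That is what "strength or importance of each connection" means in practice.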

What's an activation function? Think of it as a gatekeeper or a squashing function. It introduces non-linearity into the neuron's output. Without non-linearity, no matter how many layers you stack, the entire network would behave like a simple linear model, severely limiting its ability to learn complex patterns. Common activation functions include the Sigmoid (which squashes values between 0 and 1), Tanh (squashing between -1 and 1), and the widely used ReLU (Rectified Linear Unit), which outputs the input directly if it's positive and zero otherwise. The choice of activation function can significantly impact the network's performance and training dynamics.
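The three activation functions mentioned above are short enough to write out by hand. The sketch below uses only Python's standard `math` module. It also checks the claim about non-linearity on a tiny case: two stacked one-weight "layers" with no activation in between (the helper names `stacked` and `collapsed` and their parameter values are invented for this example) give exactly the same result as a single linear function.

```python
import math


def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Squashes any real number into the range (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Passes positive values through unchanged and outputs zero otherwise."""
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 3), round(tanh(z), 3), relu(z))

# Two linear layers with no activation in between:
#   w2 * (w1 * x + b1) + b2  ==  (w2 * w1) * x + (w2 * b1 + b2)
w1, b1, w2, b2 = 3.0, 1.0, -2.0, 4.0  # hypothetical parameters


def stacked(x):
    return w2 * (w1 * x + b1) + b2


def collapsed(x):
    return (w2 * w1) * x + (w2 * b1 + b2)


print(stacked(5.0), collapsed(5.0))  # identical: depth alone added nothing
```

Putting `relu` (or any other non-linear function) between the two layers breaks this equivalence, which is why activation functions are what let deeper networks represent more than a straight line.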

These neurons aren't just scattered randomly; they are organized into layers. Every neural network has at least an Input Layer (which receives the raw data) and an Output Layer (which produces the final prediction or classification). Between these, there can be one or more Hidden Layers. It's these hidden layers that do the heavy lifting, transforming the input data through successive stages of computation and feature extraction. Networks with many hidden layers are what we typically refer to when we talk about "deep learning." The way these layers are connected – how information flows between them – defines the network's architecture and its suitability for different tasks.
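Here is a small sketch of data flowing from an input layer through one hidden layer to an output layer, again in plain Python. The helper `dense_layer` and every weight and bias below are hand-picked assumptions so the arithmetic is easy to follow; a real network would learn these values during training.

```python
def relu(z):
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_weights = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
hidden_biases = [0.0, 0.0, -1.0]
output_weights = [[1.0, 2.0, -1.0]]
output_biases = [0.5]

x = [2.0, 1.0]                                                      # input layer
hidden = dense_layer(x, hidden_weights, hidden_biases, relu)        # hidden layer
output = dense_layer(hidden, output_weights, output_biases, lambda z: z)  # output layer

print(hidden)  # [1.0, 1.5, 0.0]
print(output)  # [4.5]
```

Adding more hidden layers is just a matter of calling `dense_layer` again on the previous layer's output, which is essentially what "deep" means.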

Types of Neural Networks: A Quick Overview

Just as there isn't one tool for every job, there isn't just one type of neural network. Different architectures have evolved, each tailored to excel at specific kinds of problems. Understanding these variations gives you a better sense of the field's breadth and the specialized tools available for different data types and tasks. While there are many specialized architectures, a few common types form the foundation for much of the work done today.

The simplest form is the Feedforward Neural Network (FNN), where information flows strictly in one direction – from the input layer, through any hidden layers, to the output layer, without any loops or cycles. These are great for many basic classification and regression tasks, like predicting house prices based on features or classifying handwritten digits (like the classic MNIST dataset). However, they don't have any memory of past inputs, which limits their use for sequential data.

For tasks involving grid-like data, particularly images, Convolutional Neural Networks (CNNs) reign supreme. Loosely inspired by how the visual cortex processes what we see, CNNs use special layers called convolutional layers that apply filters (kernels) across the input image. This allows them to automatically learn spatial hierarchies of features – from simple edges in early layers to complex object parts in deeper layers. This makes them incredibly effective for image recognition, object detection, and even medical image analysis.

Another crucial type is the Recurrent Neural Network (RNN). Unlike FNNs, RNNs have connections that form directed cycles, allowing them to maintain an internal state or 'memory'. This makes them well-suited for sequential data where context matters, such as natural language processing (understanding sentences), speech recognition, and time series forecasting.
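Both ideas can be sketched in a few lines of plain Python. The first half slides a small filter over a tiny image, which is the core operation of a convolutional layer. The second half shows one recurrent unit carrying a hidden state from step to step. The helper names `convolve2d` and `rnn_step`, the 4x4 image, the hand-written filter, and the RNN weights are all assumptions made for illustration; real CNNs and RNNs learn their filters and weights from data.

```python
import math


def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x4 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-written vertical-edge filter (hypothetical values).
kernel = [[-1, 1],
          [-1, 1]]
edges = convolve2d(image, kernel)
print(edges)  # large values only where dark meets bright


def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step for a single hidden unit: mix new input with old state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


h = 0.0
states = []
for x in [1.0, 0.0, 0.0]:          # a signal at step 1, then silence
    h = rnn_step(x, h, w_x=1.0, w_h=0.5, b=0.0)
    states.append(h)
print([round(s, 3) for s in states])
```

The filter responds only in the column where the image changes from dark to bright, and the recurrent unit's state stays above zero after the input has gone quiet. That lingering state is the 'memory' a feedforward network lacks.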

- Feedforward Neural Networks (FNNs): Data flows in one direction (input to output). Good for basic tabular data, simple classification/regression. Think basic pattern recognition.

- Convolutional Neural Networks (CNNs): Specialized for grid-like data (e.g., images). Use convolutional layers and pooling to learn spatial hierarchies. Dominant in computer vision.

- Recurrent Neural Networks (RNNs): Designed for sequential data (e.g., text, time series). Have internal memory loops allowing persistence of information. Used in NLP, speech recognition. Variants include LSTMs and GRUs which handle long-range dependencies better.

- Transformers: A more recent architecture, initially developed for NLP tasks like machine translation. Uses self-attention mechanisms to weigh the importance of different input parts. Now foundational for models like BERT and the GPT family behind ChatGPT, and increasingly used in computer vision.
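The self-attention idea behind Transformers can be sketched compactly too. The version below is deliberately stripped down: it uses the input vectors directly as queries, keys, and values, whereas a real Transformer first passes them through learned projection matrices and uses several attention heads. The function names and the three toy "token" vectors are assumptions for this example.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = inputs."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # How strongly does this position match every position (including itself)?
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # The output is a weighted average of all the value vectors.
        outputs.append([
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(d)
        ])
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]   # toy 2-dimensional "embeddings"
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one position attends to every other position. The first token puts more weight on the second token, which points in a similar direction, than on the third.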

Real-World Applications: Where Do We See Them?

Neural networks aren't just theoretical concepts confined to research labs; they are already deeply embedded in the technology we use every single day. Sometimes their presence is obvious, but often it's working subtly behind the scenes. Recognizing these applications helps appreciate the practical impact of this technology. Have you ever used Google Translate? That's sophisticated neural networks (specifically, Transformer models these days) working to understand context and provide remarkably accurate translations between languages.

Computer vision is perhaps one of the most prominent areas. When you upload photos to Facebook or Google Photos and it automatically suggests tagging your friends, that's CNNs identifying faces. Security systems use them for facial recognition. Autonomous vehicles rely heavily on neural networks to interpret data from cameras, LiDAR, and radar to perceive their surroundings – identifying pedestrians, other cars, traffic lights, and lane markings.

Recommendation engines on platforms like Amazon, Spotify, and Netflix use neural networks (often combined with other techniques) to analyze your past behavior (what you've bought, watched, or listened to) and predict what else you might like. This personalized experience is driven by the network's ability to find complex patterns in user data.

Beyond these, neural networks are making strides in healthcare, aiding doctors in diagnosing diseases like cancer from medical images (X-rays, MRIs) with impressive accuracy. They're used in financial modeling for fraud detection and algorithmic trading. In scientific research, they help analyze complex data from experiments in physics, biology, and astronomy. Even creative fields are being touched, with networks generating art, music, and text. The list keeps growing as researchers find new ways to apply their pattern-recognition prowess.

The Relationship with Deep Learning

You often hear "neural networks" and "deep learning" mentioned together, sometimes almost interchangeably. So, what's the precise connection? Is deep learning just a fancy rebranding? Not quite. Think of Artificial Intelligence (AI) as the broadest concept – machines exhibiting intelligent behavior. Within AI, there's Machine Learning (ML), which focuses on systems that learn from data without being explicitly programmed. Neural networks are a specific type of machine learning model, inspired by the brain's structure.

Deep Learning, then, is essentially a subfield of machine learning that utilizes specific kinds of neural networks – namely, those with multiple hidden layers (hence, "deep"). While early neural networks often had only one or maybe two hidden layers (sometimes called "shallow" networks), the breakthroughs in recent years have involved architectures with tens, hundreds, or even thousands of layers. Why does depth matter? Each layer learns to represent the data at a different level of abstraction. For example, in image recognition, early layers might detect simple edges, mid-layers might combine edges to form shapes or textures, and later layers might recognize complex objects like faces or cars.

This ability to learn hierarchical features automatically is what gives deep learning models their power, allowing them to tackle extremely complex problems like natural language understanding, realistic image generation, and intricate game-playing (think AlphaGo). The availability of massive datasets (Big Data) and powerful computing hardware (especially GPUs) has been crucial in enabling the training of these very deep networks. So, while all deep learning involves neural networks, not all neural networks qualify as "deep." Deep learning represents the cutting edge, leveraging the layered structure of neural networks to achieve unprecedented performance on challenging tasks.

Challenges and Future Directions

Despite their incredible successes, neural networks and deep learning aren't without their challenges and limitations. One major hurdle is the need for vast amounts of labelled training data. Training state-of-the-art models often requires datasets far larger than what's available for many specific problems, and creating labelled data can be expensive and time-consuming. Techniques like transfer learning (reusing parts of pre-trained models) and unsupervised learning are helping, but data hunger remains a significant issue.

Another challenge is the computational cost. Training large, deep networks demands substantial processing power, often relying on specialized hardware like GPUs or TPUs, which consumes significant energy and can be costly. Furthermore, many deep learning models function as "black boxes." While they might make incredibly accurate predictions, understanding why they made a specific decision can be difficult. This lack of interpretability is a major concern in high-stakes applications like medical diagnosis or loan applications, where transparency and accountability are crucial. The field of Explainable AI (XAI) is actively working on methods to make these models more understandable.

Looking ahead, the future of neural networks holds exciting possibilities. Research continues into more efficient architectures, better training algorithms, and techniques requiring less data. Neuromorphic computing, which aims to build hardware that mimics the brain's structure and low-power operation more directly, is a promising avenue. We're also likely to see continued progress in areas like reinforcement learning, generative models (creating realistic images, text, and audio), and the integration of neural networks with symbolic reasoning approaches. Addressing the ethical considerations surrounding bias in data, fairness in algorithmic decision-making, and the societal impact of AI will also be paramount as these powerful technologies become even more pervasive.

Conclusion

From recognizing your face to translating languages on the fly, neural networks have moved from the realm of science fiction into the fabric of our daily digital lives. Over the course of this introduction to neural networks, we've seen how these brain-inspired computational models, with their interconnected neurons and layered architectures, learn from data through processes like backpropagation. They are not just mathematical curiosities; they are the engine driving the deep learning revolution, enabling breakthroughs in computer vision, natural language processing, and countless other domains.

While challenges like data requirements, computational cost, and interpretability remain, the pace of innovation is relentless. Understanding the fundamentals – what neural networks are, how they learn, and where they're applied – provides a crucial lens through which to view the future of technology. They represent a powerful paradigm shift in computing, moving from explicitly programmed instructions to systems that learn and adapt. As the backbone of deep learning, neural networks will undoubtedly continue to shape our world in profound ways for years to come.

FAQs

What's the difference between AI, Machine Learning, and Neural Networks?

Think of them as nested concepts. Artificial Intelligence (AI) is the broad field of creating intelligent machines. Machine Learning (ML) is a subset of AI where systems learn from data. Neural Networks are a specific type of ML model inspired by the brain, often used for complex pattern recognition. Deep Learning uses neural networks with many layers.

Are neural networks really like human brains?

They are inspired by the brain but are vastly simplified. Artificial neurons and layers mimic the interconnected structure, but they don't replicate the biological complexity, chemistry, or consciousness of a real brain. It's more of a useful analogy and mathematical framework.

Do I need advanced math to understand neural networks?

To understand the core concepts (like neurons, layers, learning from examples), you don't need deep math. However, to understand the mechanics of training (like backpropagation and gradient descent) or to implement them yourself, knowledge of linear algebra, calculus, and probability is very helpful.

What is 'overfitting' in neural networks?

Overfitting happens when a neural network learns the training data too well, including its noise and specific quirks. As a result, it performs excellently on the data it was trained on but poorly on new, unseen data. It fails to generalize. Regularization techniques such as weight decay and dropout help prevent overfitting, while a held-out validation set helps you detect it and stop training before it gets worse.
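One common way to put a validation set to work against overfitting is early stopping: keep training while the validation loss improves, and stop once it has stalled for a few epochs. The sketch below shows only that decision logic. The function name `early_stopping_epoch`, the `patience` value, and the loss curves are made up for illustration rather than taken from a real training run.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch, stopping once validation loss
    has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, bad_epochs = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, bad_epochs = epoch, loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # validation loss has stalled: stop training here
    return best_epoch


# Made-up curves: training loss keeps falling, validation loss turns upward.
train_losses = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08]
val_losses = [0.95, 0.70, 0.55, 0.58, 0.66, 0.75]

print(early_stopping_epoch(val_losses))  # 2
```

The gap that opens between the two curves after epoch 2 is the classic signature of overfitting: the model keeps getting better on data it has seen while getting worse on data it has not.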

How much data do neural networks need?

It varies greatly depending on the complexity of the task and the network architecture. Simple tasks might require thousands of examples, while complex deep learning models (like those for image recognition or language translation) are often trained on millions or even billions of data points.

Can neural networks make mistakes?

Absolutely. No model is perfect. Neural networks can make errors due to various reasons: insufficient or biased training data, limitations of the model architecture, overfitting, or encountering data very different from what they were trained on. Evaluating their performance and understanding their limitations is crucial.

What programming languages are used for neural networks?

Python is overwhelmingly the most popular language, thanks to powerful libraries like TensorFlow, PyTorch, and Keras, which simplify the process of building and training neural networks. Other languages like R, Java, and C++ also have libraries, but Python dominates the research and development landscape.